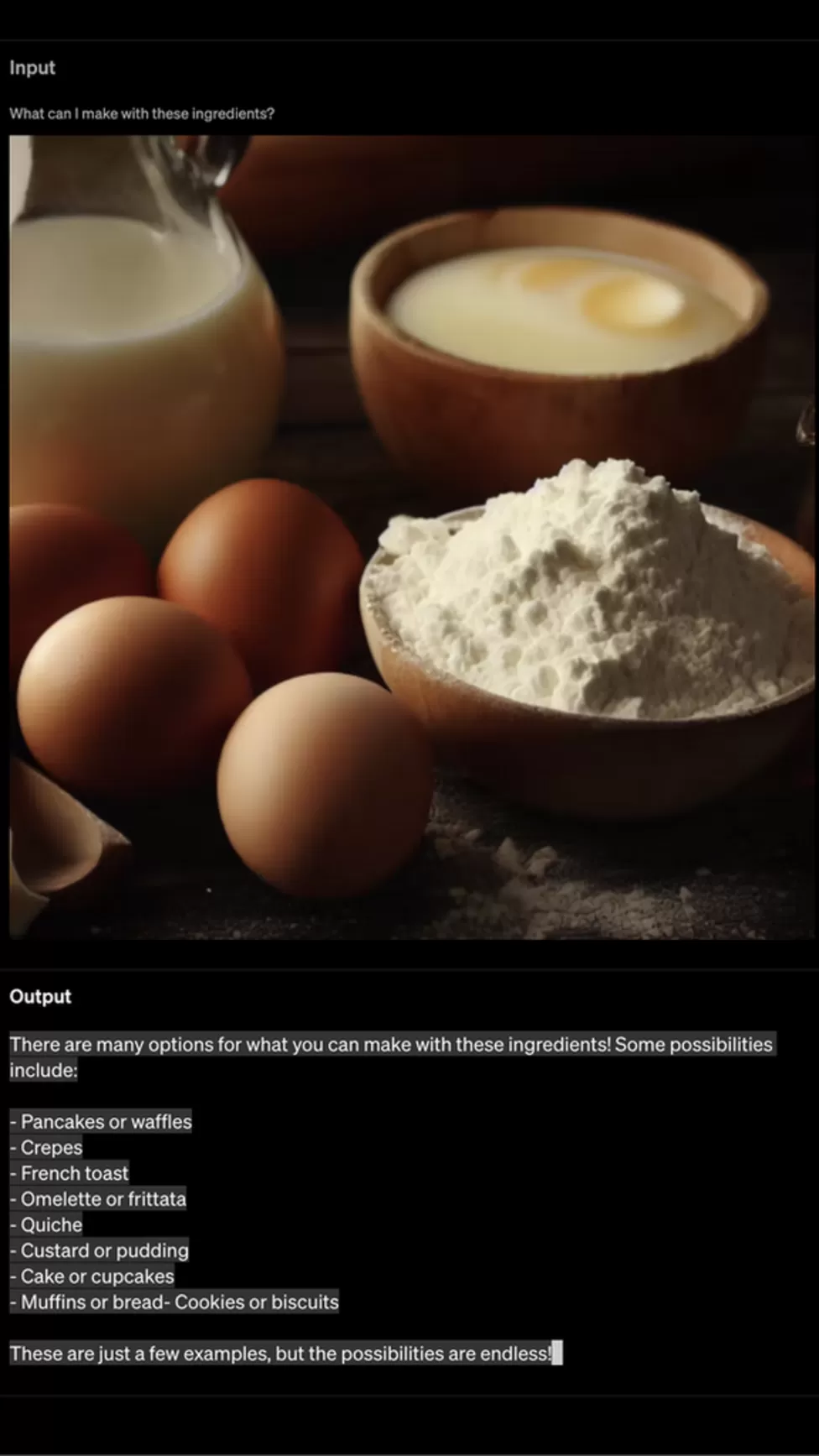

OpenAI has released GPT-4, the latest version of the AI model behind its hugely popular chatbot ChatGPT. The new model can respond to images – providing recipe suggestions from photos of ingredients, for example, as well as writing captions and descriptions. It can also process up to 25,000 words, about eight times as many as ChatGPT.

Millions of people have used ChatGPT since it launched in November 2022. Popular requests include writing songs, poems, marketing copy and computer code, and helping with homework – although teachers say students shouldn’t use it. ChatGPT answers questions in natural, human-like language, and it can also mimic the writing styles of songwriters and authors. Its knowledge base is the internet as it was in 2021. There are concerns that it could one day take over many jobs currently done by humans.

OpenAI said it had spent six months on safety features for GPT-4, and had trained it on human feedback. However, it warned that the model may still be prone to sharing disinformation. GPT-4 will initially be available to ChatGPT Plus subscribers, who pay $20 per month for premium access to the service. It’s already powering Microsoft’s Bing search engine platform. The tech giant has invested $10bn in OpenAI.

In a live demo, GPT-4 generated an answer to a complicated tax query – although there was no way to verify its answer. GPT-4, like ChatGPT, is a type of generative artificial intelligence. Generative AI uses algorithms and predictive text to create new content based on prompts.

GPT-4 has “more advanced reasoning skills” than ChatGPT, OpenAI said. The model can, for example, find available meeting times for three schedules. OpenAI also announced new partnerships with language learning app Duolingo and Be My Eyes, an application for the visually impaired, to create AI chatbots that can assist their users using natural language. However, OpenAI has warned that GPT-4, like its predecessors, is still not fully reliable and may “hallucinate” – a phenomenon where AI invents facts or makes reasoning errors.
